Intro:

Open Orchestra was conceived as a system able to provide musicians with a high-fidelity experience of ensemble rehearsal or performance, combined with the convenience and flexibility of solo study.

Challenge:

Build a software system capable of remotely streaming ultra-high-quality, lossless audio and video in tandem across three screens, and of managing user sessions (repertoire playback, recordings, interactions with professors, and learning modules) through a fourth, touch-enabled screen.

Work:

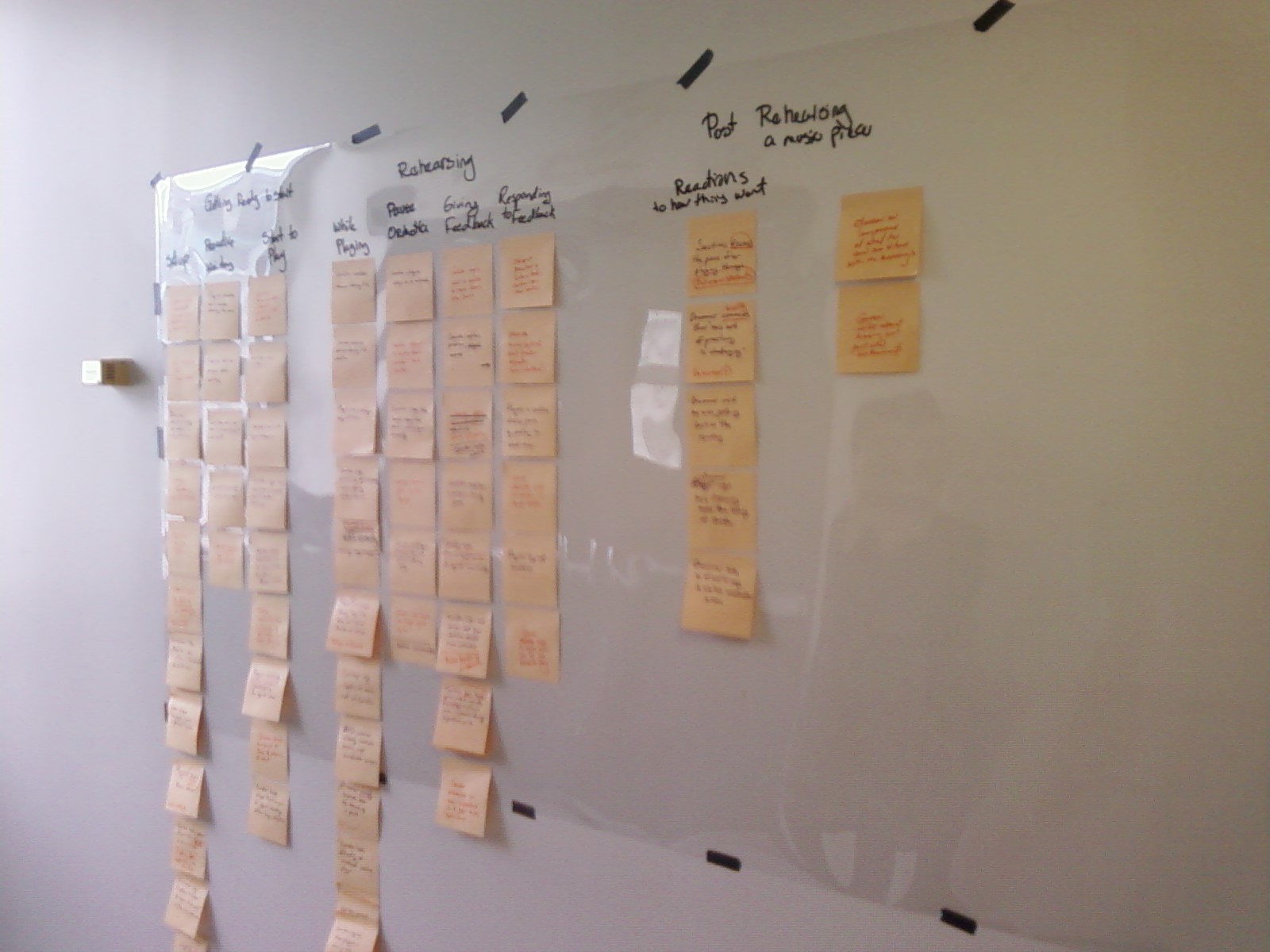

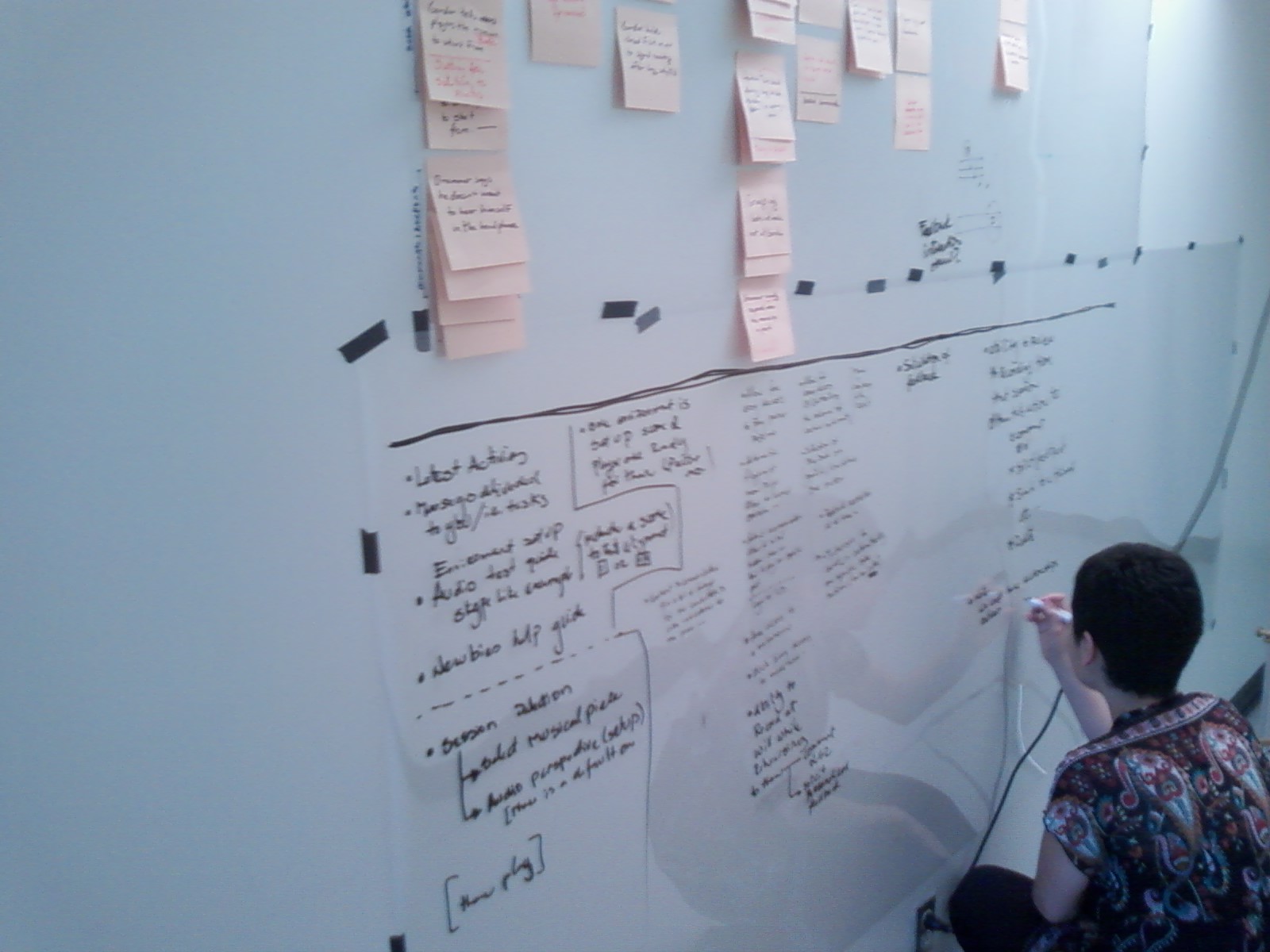

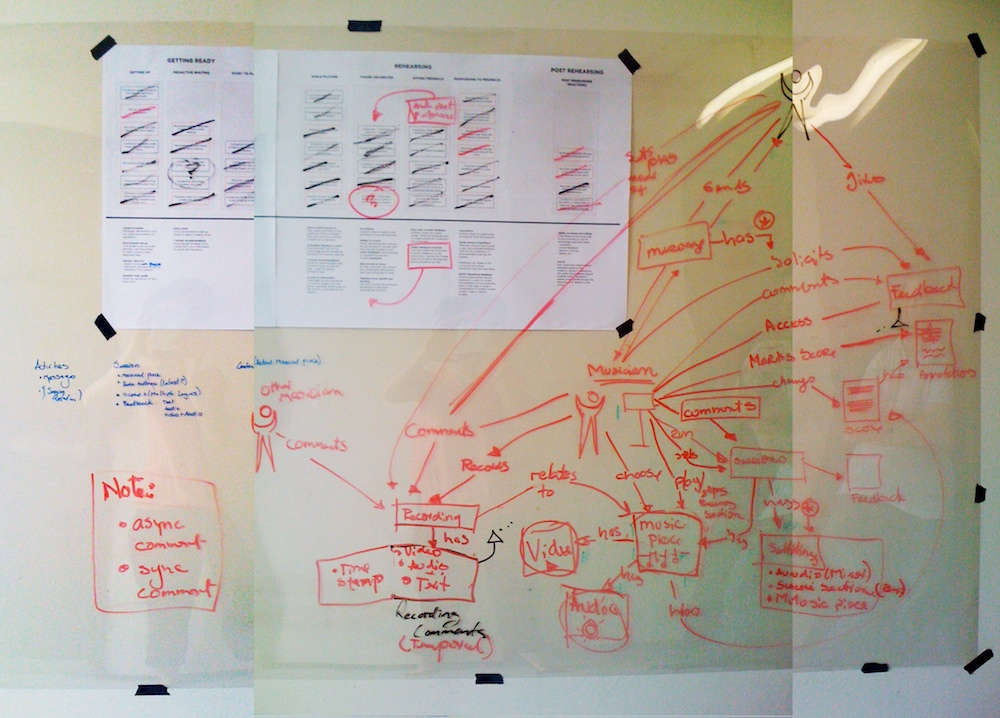

In perhaps my most difficult technical project to date, I prototyped and built a complete software system, from scratch, that synchronized and played three remote lossless HD video streams and up to ten remote audio streams simultaneously via RTMP over CANARIE’s 10 Gbps fiber research network. Teams of researchers at McGill University and the University of British Columbia recorded the video and audio repertoire, and another researcher built the streaming media server. My supervisor conducted extensive user research and involved me in creating the personas, mental models, and information architectures that drove my implementation of the project. I was also responsible for rapidly creating both low- and high-fidelity prototypes and iterating on these designs through formal and informal user testing alongside my supervisor.
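To give a sense of what keeping that many streams in step involves, here is a minimal sketch of one common approach: compare each stream's playhead to a master clock and re-seek any stream that has drifted too far. This is illustrative only. The actual client ran on Adobe AIR, and the function name, stream identifiers, and the 40 ms tolerance are all assumptions rather than details of the original system.

```python
from typing import Dict


def plan_corrections(
    master_pos: float,
    stream_positions: Dict[str, float],
    tolerance: float = 0.040,  # assumed threshold in seconds, not from the project
) -> Dict[str, float]:
    """Return a seek target for every stream that has drifted past the tolerance.

    master_pos and the values in stream_positions are playhead positions in seconds.
    Streams within the tolerance are left alone to avoid audible or visible jumps.
    """
    corrections = {}
    for stream_id, position in stream_positions.items():
        if abs(position - master_pos) > tolerance:
            corrections[stream_id] = master_pos
    return corrections


# Hypothetical stream names: two video panels and one audio track.
playheads = {"video_left": 12.01, "video_right": 12.20, "audio_bass": 11.90}
to_seek = plan_corrections(12.0, playheads)
```

In this example only `video_right` and `audio_bass` would be re-seeked; `video_left` is within the tolerance and keeps playing untouched.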

After completing these initial design stages, I architected and implemented a MySQL- and PHP-based backend, with Doctrine ORM handling object and content management. For the front-end application that would run on each physical unit, I built an Adobe AIR-based client to run on a custom-built computer rig composed of three 32” monitors in a panorama setup, one touchscreen, and a professional-grade microphone and mixer (with no physical keyboard or mouse). The application allowed users to exchange messages with other users and professors and to interact with the repertoire of recorded jazz, baroque, and opera performances. The touch interface rendered a contextual overlay onto the sheet music from MusicXML entries, which let the user set recording parameters, play back the conductor’s notes, and jump to different locations in the playback. The application also allowed users to change the mixer settings of the 6 to 10 streaming audio tracks and save those settings for later use or send them to other users.
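As a rough illustration of the saved mixer settings, the sketch below models a preset as a list of per-track settings that can be serialized, stored or sent to another user, and restored. It is written in Python for brevity; the real system used PHP with Doctrine on the backend and Adobe AIR on the client, and every class name, field, and track name here is hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class TrackSetting:
    track_id: str        # hypothetical identifier for one streamed audio track
    gain: float = 1.0    # linear gain, assumed range 0.0 to 1.0
    muted: bool = False


@dataclass
class MixerPreset:
    name: str
    tracks: List[TrackSetting] = field(default_factory=list)

    def to_json(self) -> str:
        """Serialize the preset so it can be stored or sent to another user."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, payload: str) -> "MixerPreset":
        """Rebuild a preset from its serialized form."""
        data = json.loads(payload)
        tracks = [TrackSetting(**track) for track in data["tracks"]]
        return cls(name=data["name"], tracks=tracks)


# A user mutes their own part to practice against the rest of the ensemble.
preset = MixerPreset(
    name="practice without bass",
    tracks=[TrackSetting("bass", gain=0.0, muted=True), TrackSetting("piano", gain=0.8)],
)
restored = MixerPreset.from_json(preset.to_json())
```

Keeping the preset as plain data means the same payload can serve both "save for later" and "send to another user".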

Roles & Credits:

Adriana Olmos served as the user experience researcher and designer (and a passionate mentor still to this day!). Credit for the video on this page goes to her, as does the project’s overall UX (interaction design, visual design, user testing leadership, and more).

With Adriana’s mentorship and training, I assisted her throughout the UX research and design phases. My primary role was rapid prototyper and full-stack developer, and I was solely responsible for development of the entire platform.